by Oliver L. Haimson

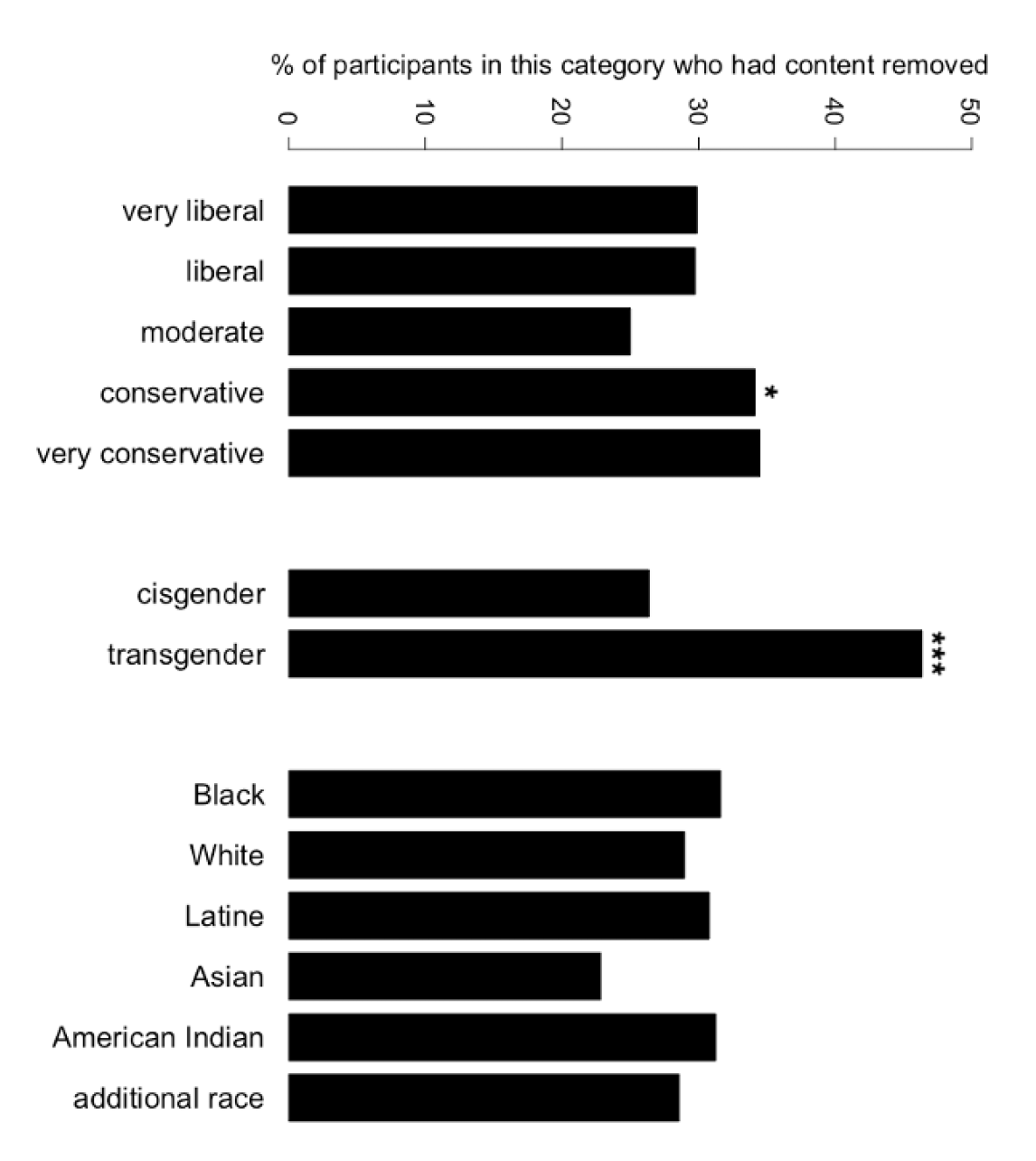

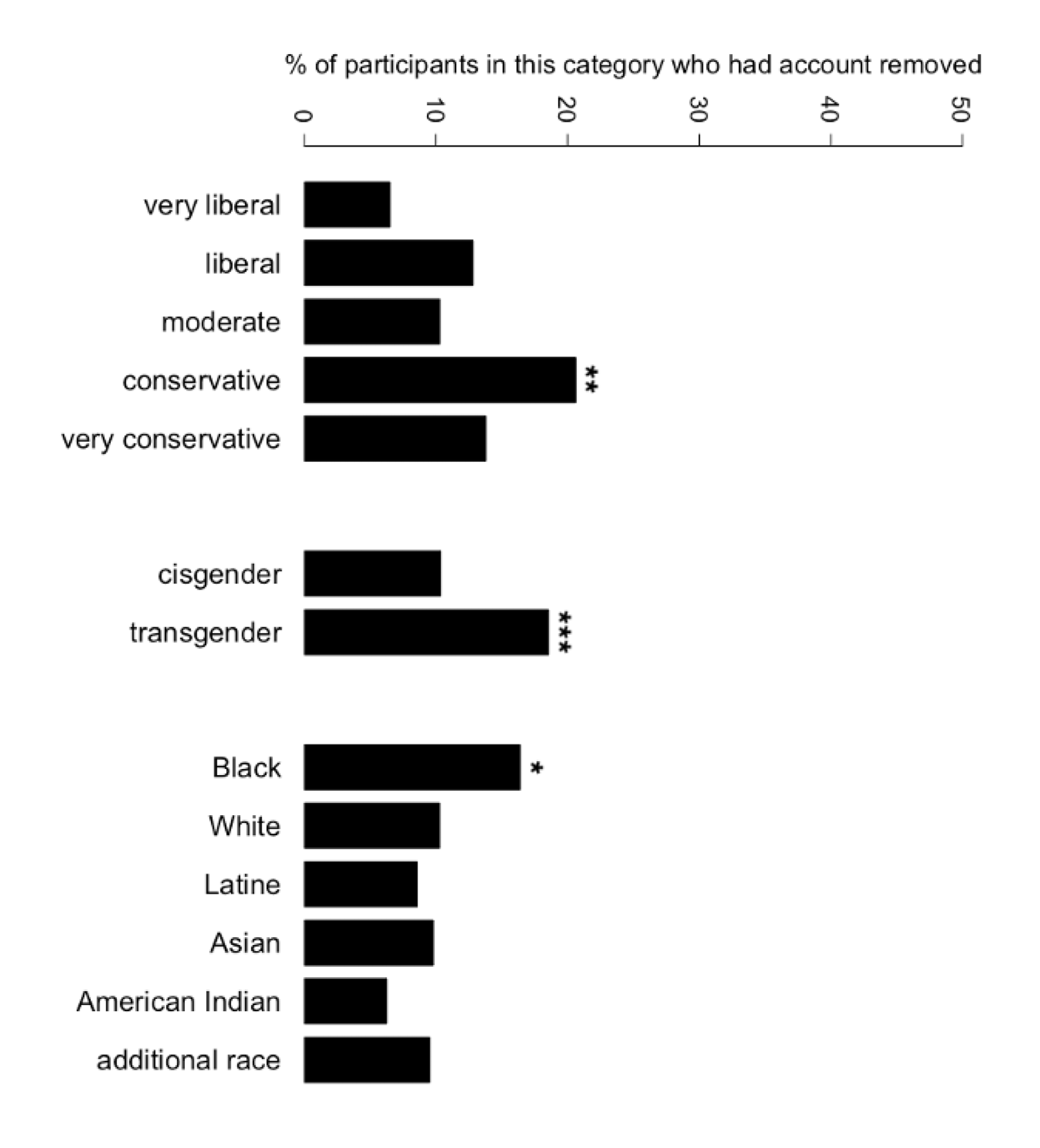

We often hear in the news about particular groups of people who face censorship on social media more than others – for instance, transgender users having their videos censored on TikTok, Black Facebook users barred from discussing their experiences with racism, and conservatives claiming that they are censored on social media. Salty’s own research has found evidence of bias against people of color and queer people in Instagram’s content moderation. But are these claims true on a large scale, and would they be supported by academic research? If so, how might social media censorship experiences relate to the types of content each of these groups post?

My research team at the University of Michigan set out to answer these questions by studying which groups of social media users are more likely to have their content and accounts removed, what types of content are removed, and how this differs between marginalized users, such as trans and Black people, and political conservatives. We conducted two surveys with hundreds of social media users to understand their experiences with content removals on social media.

We found that three groups have content and accounts removed more often than others: political conservatives, trans people, and Black people. However, the types of content removed from each group, and the removals’ implications, are substantially different for marginalized groups as compared to conservatives.

Trans participants described removals of content that was mistakenly classified as “adult” despite following site guidelines, content that insulted or criticized a dominant group (e.g., transphobic people), and content related to their trans or queer identity. Black participants described removals of content related to racial justice or describing their experiences with racism. For both of these groups, censored content was related to participants’ marginalized identities and their desire to express those identities online. At the same time, this content usually either explicitly follows site policies or falls into gray areas with respect to them.

On the other hand, conservative participants described having content removed that was offensive/inappropriate or allegedly so, Covid-related, misinformation, adult content, or hate speech. In these cases, social media sites had removed harmful content, which usually violated site policies, so that they could cultivate safe online spaces with accurate information.

“I was discussing anti-racism and my post got removed for hateful speech because I mentioned ‘white people’ in a ‘negative’ way.” – Black participant

It’s important to recognize that for trans and Black social media users, content removals often limit their ability to express their marginalized identities, which in turn limits their ability to participate in online public spaces. For conservative participants, on the other hand, content removals often enforce social media site policies that are designed to remove harmful and inaccurate content.

“I posted a selfie on Tumblr where I was not wearing a shirt, but had my chest covered by tape so that it appeared flat and no tissue was visible. Tumblr took it down despite it being allowed.” – Trans participant

These results show that popular press articles describing online censorship do have truth to them – however, the types of content removed from trans and Black participants are very different from the types of “censorship” conservatives face. One way that we describe marginalized people’s experiences with content moderation is as false positives and gray areas (see the figure below) – that is, sometimes social media policies fail to recognize the complexity of the contexts and scenarios marginalized people’s content falls into. One example is images of trans men who have had top surgery, yet still find that their nipples are considered “adult” and censored by social media sites.

Moving forward, there are several potential ways that social media sites can embrace content moderation gray areas to better support marginalized users. It might be the case that platforms and their moderation policies/procedures need to be completely restructured. But if we are going to work within existing systems, a few approaches can help marginalized people. First, sites can apply different moderation approaches for different online spaces depending on context. For example, some material may not be appropriate for one’s timeline, but is perfectly acceptable within a private group. Next, sites must work to develop specialized tools for particular marginalized groups. Finally, content moderation policies will improve if sites meaningfully involve marginalized communities in creating moderation policy.

By highlighting which groups are more likely to have content censored on social media sites and why, in this work we take first steps toward making social media content moderation more equitable.

*This essay summarizes a research paper that was co-authored by Daniel Delmonaco, Peipei Nie, and Andrea Wegner, which will be published in the journal Proceedings of the ACM on Human-Computer Interaction and presented at the ACM Conference on Computer-Supported Cooperative Work and Social Computing in October 2021. This work was supported by the National Science Foundation grant #1942125.*

About the Author: Oliver Haimson is part of the Salty Algorithmic Bias Collective and an Assistant Professor at the University of Michigan School of Information. His research interests include trans technology and marginalized people’s experiences with social media content moderation.